Strategies for Integrating Generative AI in Engineering Education

Welcome!

Please take a moment to consider:

- What course would you most like to integrate AI tools into?

- What’s your biggest concern about using AI in your teaching?

Workshop Goals:

- Identify dimensions of AI integration in engineering education

- Analyze case studies using a structured framework

- Select appropriate AI approaches for your specific courses

- Begin developing an implementation plan

- Access resources for continued development

Workshop Schedule

- Part 1: Foundation (15 min)

  - Introduction and AI landscape

  - The AI Integration Taxonomy

- Part 2: Exploring the Taxonomy (40 min)

  - Dimensions of AI integration

  - Case examples across engineering disciplines

  - Discussion of implementation approaches

- Part 3: Application & Planning (35 min)

  - Case study analysis in small groups

  - Individual implementation planning

  - Next steps and resources

PART 1: FOUNDATION

The AI Landscape in Engineering Education

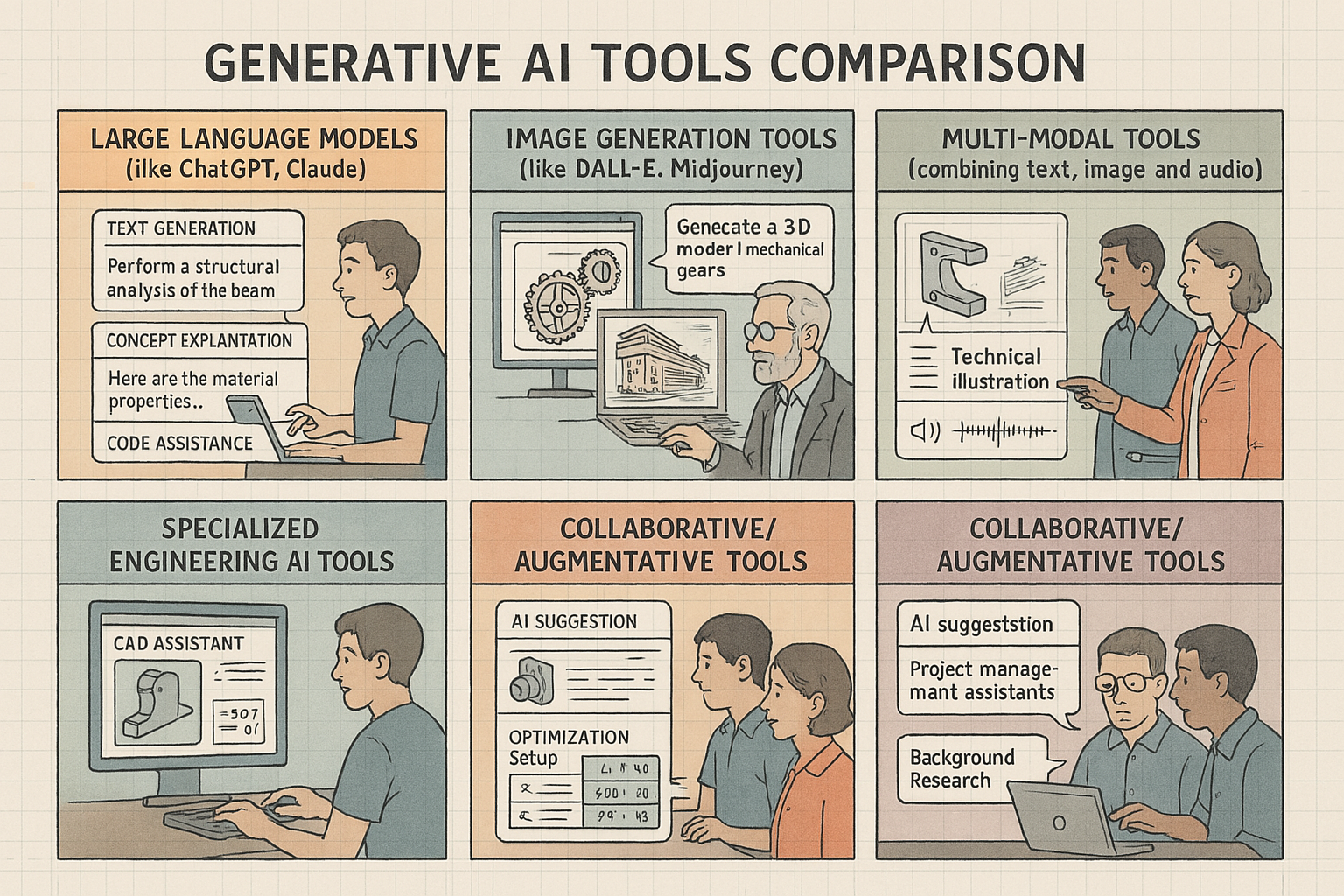

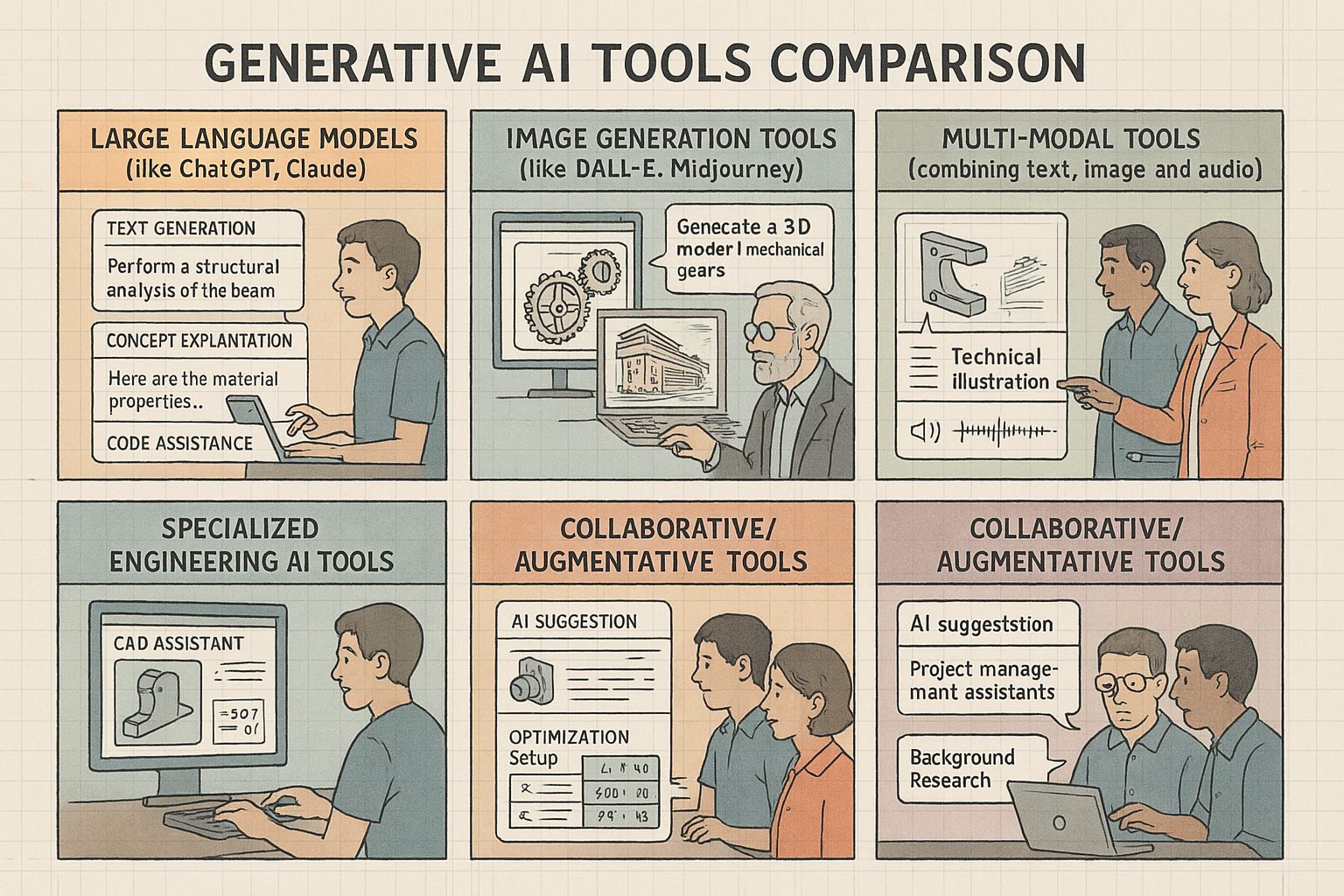

Generative AI Tools in Engineering

- Large Language Models (ChatGPT, Claude)

- Code Generation (GitHub Copilot)

- Image Generation (DALL-E, Midjourney)

- Speech Recognition (Whisper)

- Multimodal Tools (GPT-4V)

Engineering Education Challenges

- Technical domain knowledge

- Visualization of complex concepts

- Skill development vs. conceptual understanding

- Balancing theory and application

- Preparing for evolving professional practice

Students and professionals are already using these tools

Opportunity to integrate intentionally rather than react

Why a Framework for Integration?

Ad hoc integration leads to:

- Inconsistent student experiences

- Missed pedagogical opportunities

- Assessment misalignment

- Potential equity issues

- Unclear expectations

A structured framework provides:

- Common language for discussing integration

- Multiple dimensions for consideration

- Intentional decision-making

- Alignment with educational goals

- Disciplinary adaptability

The AI Integration Taxonomy

Six dimensions to consider:

- Pedagogical Purpose: Why integrate AI?

- Integration Depth: How deeply embedded?

- Student Agency: How much student control?

- Assessment Alignment: How to evaluate learning?

- Technical Implementation: What technical aspects matter?

- Ethical & Professional: What broader implications?

This framework helps map the integration landscape and make intentional choices

PART 2: EXPLORING THE TAXONOMY

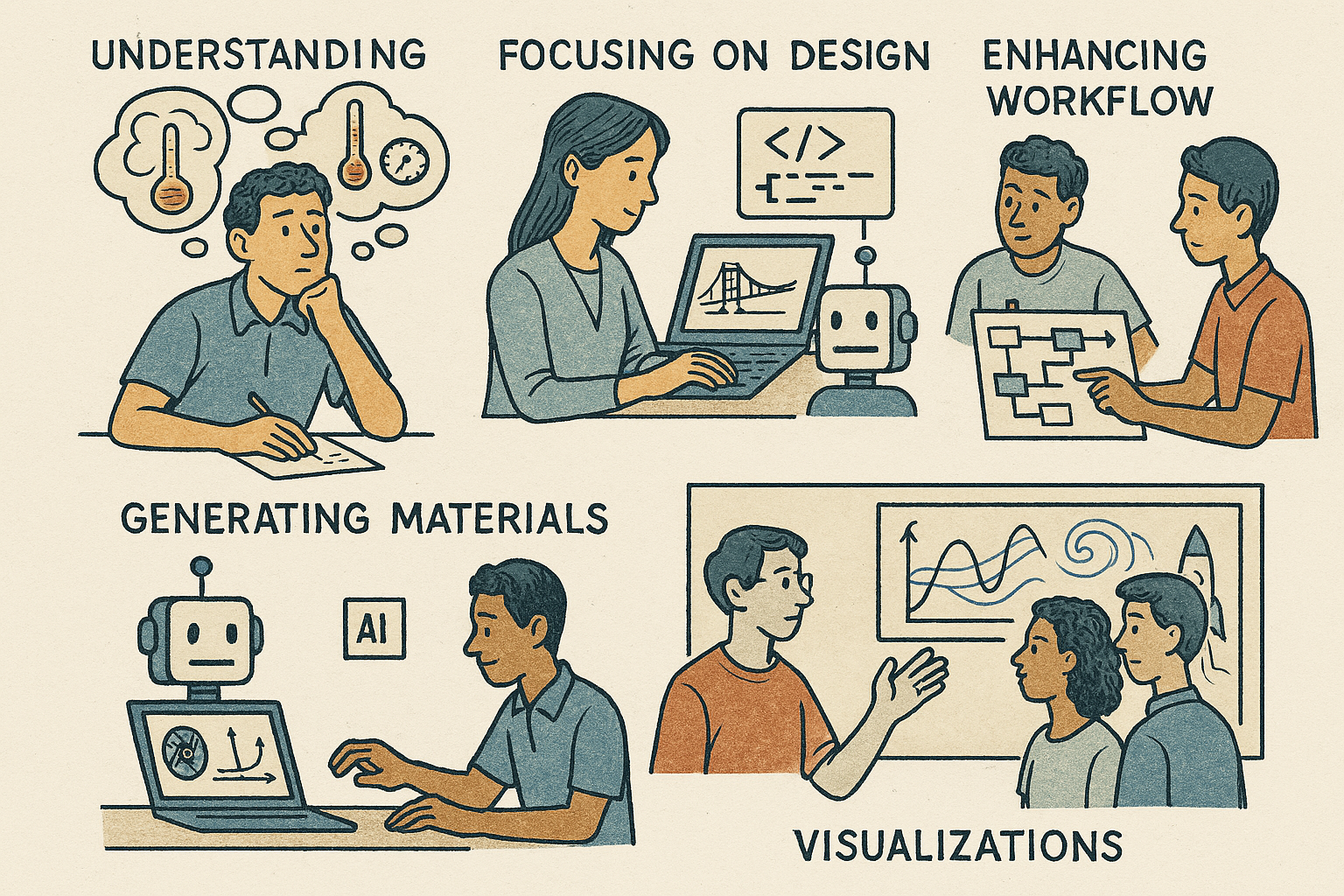

1. Pedagogical Purpose Dimension

Five primary purposes:

- Conceptual Understanding: Explaining complex concepts, addressing misconceptions

- Skill Development: Bypassing technical hurdles for higher-order skills

- Process Augmentation: Enhancing workflows and methodologies

- Content Creation: Generating or transforming educational materials

- Visualization: Helping visualize complex phenomena

Engineering Example:

In thermodynamics, students use ChatGPT to:

- Generate multiple explanations of entropy concepts

- Connect microscopic and macroscopic views

- Create conceptual comparisons between similar processes

- Identify and address common misconceptions

Purpose Dimension: Discussion

Small Group Discussion (3 minutes)

- What aspects of your courses are most challenging for students to understand?

- Which pedagogical purpose seems most valuable for your context?

- How might AI tools address these specific challenges?

Consider:

- Conceptually difficult topics

- Areas where students struggle with visualization

- Skills that require significant practice

- Content that needs multiple perspectives

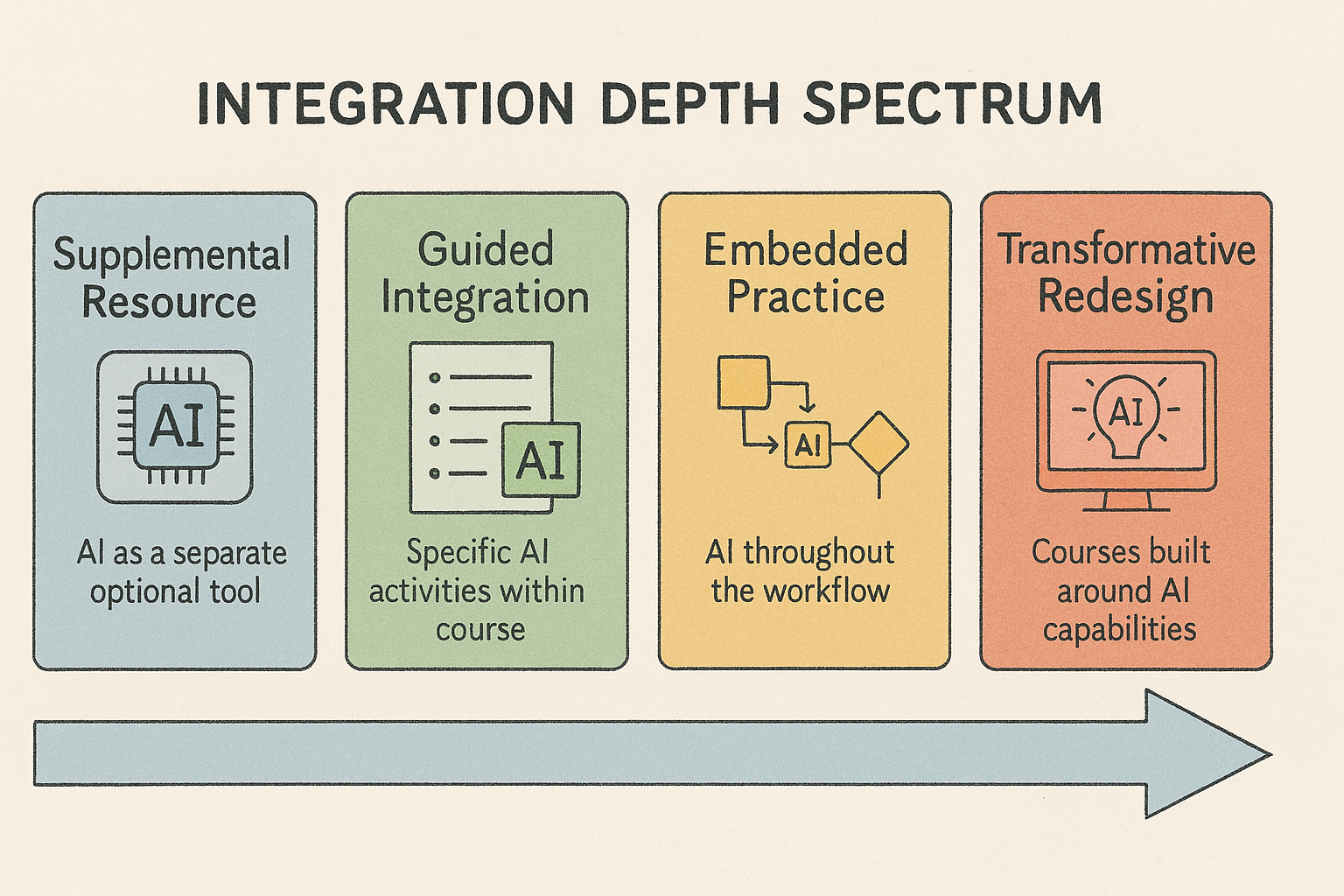

2. Integration Depth Dimension

Spectrum of integration:

- Supplemental Resource: Optional tools outside core instruction

- Guided Integration: Structured prompts for specific activities

- Embedded Practice: AI integrated throughout regular coursework

- Transformative Redesign: Course restructured around AI capabilities

Engineering Example:

In a data structures course with GitHub Copilot:

- Started as supplement for debugging

- Moved to guided exercises comparing manual and AI-assisted implementation

- Evolved to embedded practice with design-first approach

- Assessment redesigned to focus on algorithm design over syntax

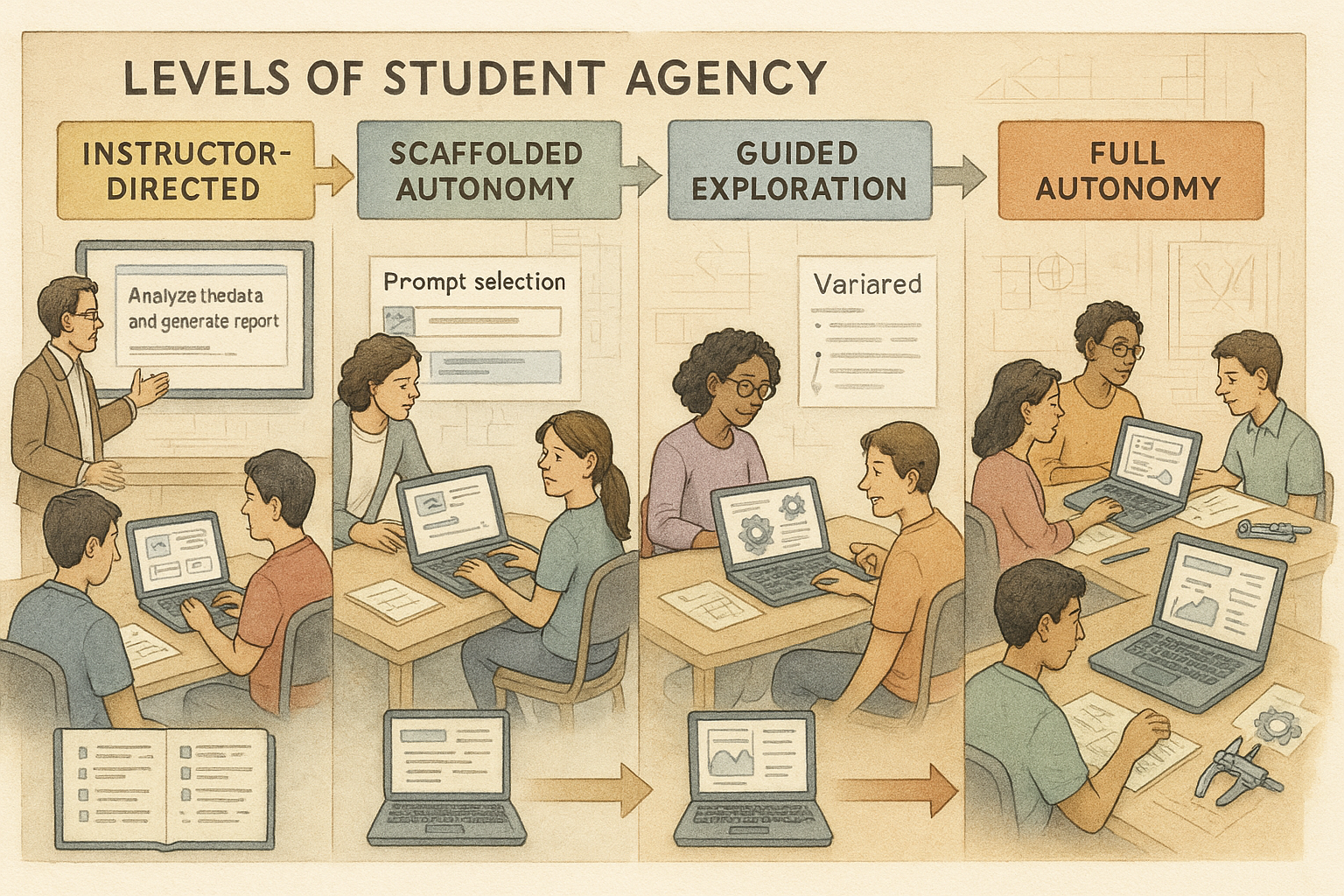

3. Student Agency Dimension

Levels of student choice and responsibility:

- Instructor-Directed: Faculty provides specific prompts/tools

- Scaffolded Autonomy: Progressive responsibility with guidance

- Guided Exploration: Students experiment within boundaries

- Full Autonomy: Independent decisions about AI use

Engineering Example:

In a materials science course:

- Began with specific instructor-provided prompts

- Gradually introduced template libraries students could modify

- Moved to student-created prompts with guidance

- Culminated in students determining when/how to use AI tools

Integration & Agency: Quick Poll

With a partner, take two minutes to discuss:

- Current integration depth in your courses

- Desired integration depth you’d like to achieve

- Student agency level you’d be comfortable with

Questions to consider:

- What barriers exist to deeper integration?

- What student preparation would be necessary?

- How might agency levels progress across a program?

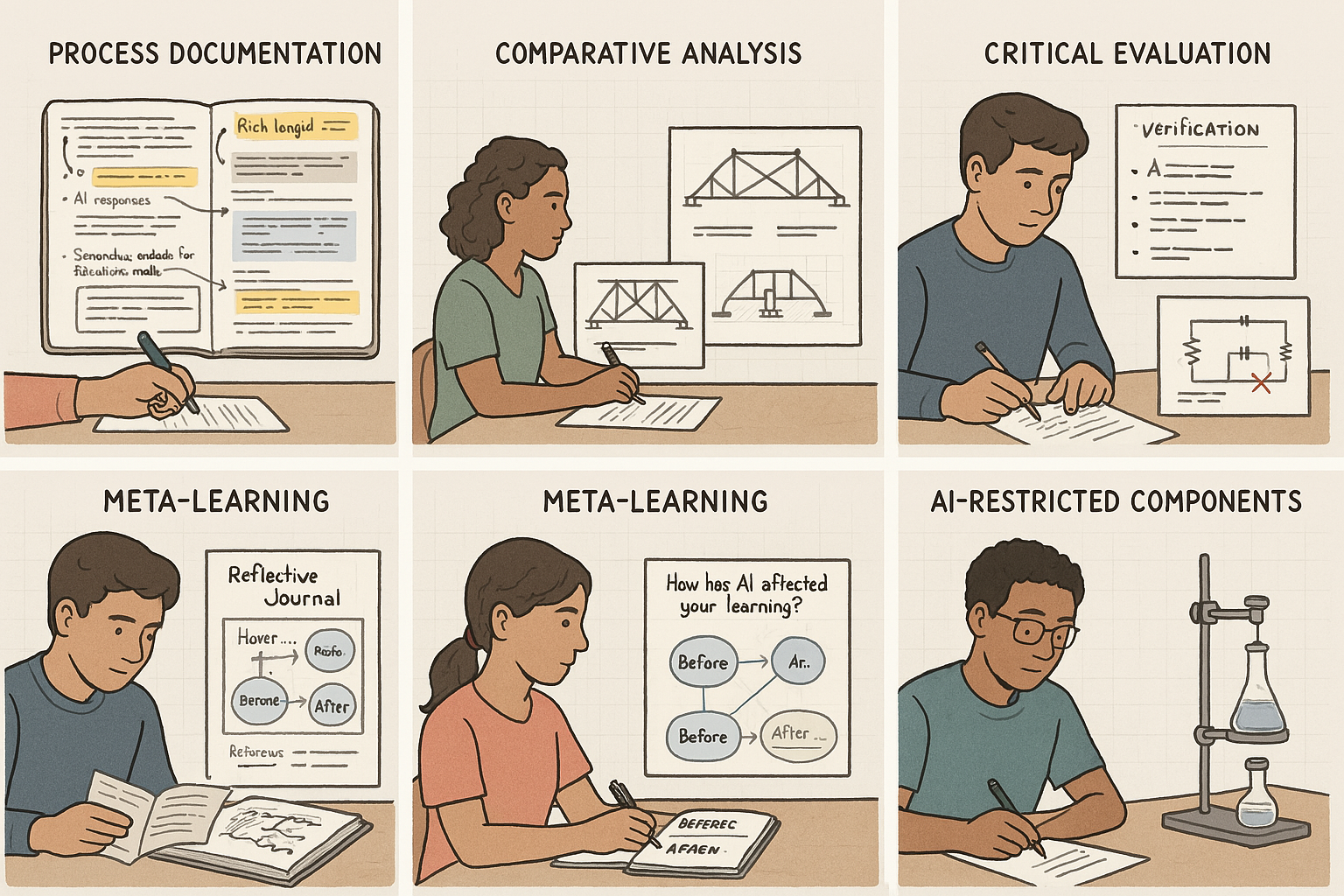

4. Assessment Alignment Dimension

Assessment approaches:

- Process Documentation: Evaluating AI use in workflow

- Comparative Analysis: Evaluating AI outputs vs. alternatives

- Critical Evaluation: Verifying and refining AI contributions

- Meta-Learning: Reflection on learning with AI

- AI-Restricted Components: Some assessment without AI

Engineering Example:

In electrical engineering circuit design:

- Students document their prompting strategies

- Compare AI suggestions with manual calculations

- Identify and correct errors in AI recommendations

- Reflect on how AI affected their design process

- Still complete fundamental circuit analysis manually
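The "compare AI suggestions with manual calculations" step can be made concrete with a small check like the one below. This is only a sketch under stated assumptions: the voltage-divider circuit, the component values, the AI-suggested value of 4.8 V, and the 1% tolerance are all hypothetical choices for illustration, not taken from the case study.

```python
import math


def voltage_divider(v_in: float, r1: float, r2: float) -> float:
    """Manual reference calculation: V_out = V_in * R2 / (R1 + R2)."""
    return v_in * r2 / (r1 + r2)


def check_ai_value(ai_value: float, reference: float, rel_tol: float = 0.01) -> dict:
    """Compare an AI-suggested value with a hand calculation.

    rel_tol is a hypothetical 1% acceptance threshold; a course would
    choose its own. Assumes the reference value is non-zero.
    """
    return {
        "ai_value": ai_value,
        "reference": reference,
        "relative_error": abs(ai_value - reference) / abs(reference),
        "agrees": math.isclose(ai_value, reference, rel_tol=rel_tol),
    }


# Hypothetical example: 12 V source, R1 = 10 kOhm, R2 = 5 kOhm.
reference = voltage_divider(v_in=12.0, r1=10_000.0, r2=5_000.0)

# Hypothetical value copied from an AI response.
report = check_ai_value(ai_value=4.8, reference=reference)
print(report)
```

A student could attach a report like this to their process documentation, together with a short note on where the disagreement came from.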

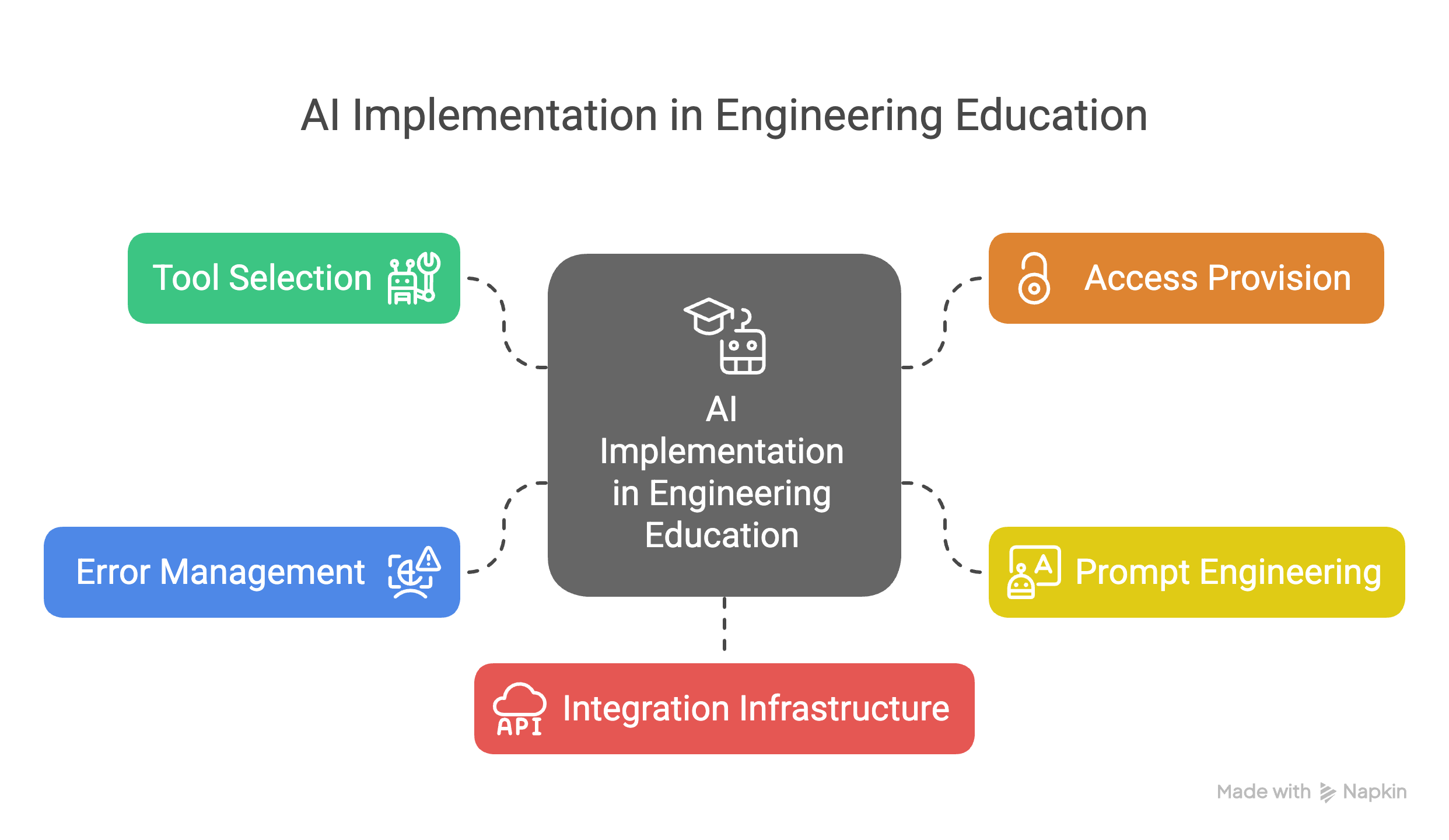

5. Technical Implementation Dimension

Implementation aspects:

- Tool Selection: Matching capabilities to objectives

- Access Provision: Ensuring equitable student access

- Prompt Engineering: Developing effective prompts

- Error Management: Handling AI limitations

- Integration Infrastructure: Technical platforms for delivery

Engineering Examples:

- Civil engineering using Whisper for field note transcription

- Chemical engineering using DALL-E for safety visualization

- Mechanical engineering using ChatGPT for ideation

- Each tool selected for specific capabilities aligned with learning goals

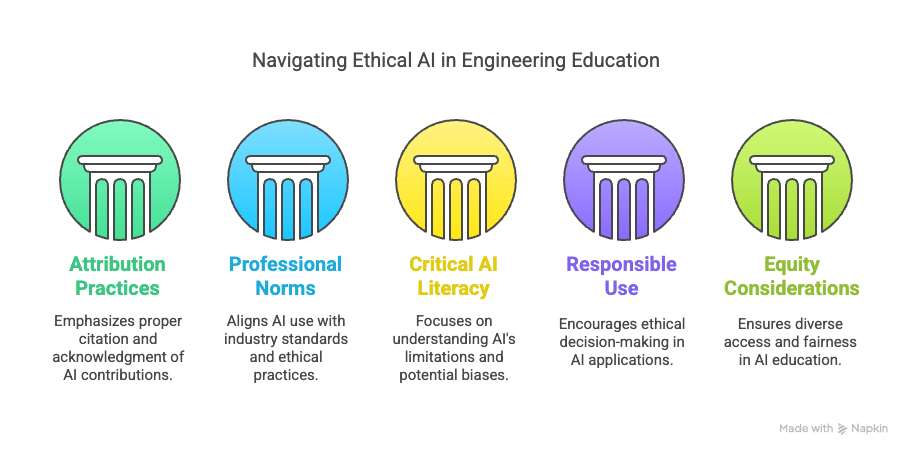

6. Ethical & Professional Development Dimension

Ethical aspects:

- Attribution Practices: Citation of AI contributions

- Professional Norms: Alignment with industry practices

- Critical AI Literacy: Understanding capabilities and limitations

- Responsible Use: Ethical decision-making

- Equity Considerations: Benefits reaching all students

Engineering Example:

Across disciplines:

- Developing AI contribution statements

- Consulting with industry on current practices

- Teaching systematic verification of AI outputs

- Discussing societal implications of AI in engineering

- Addressing varying levels of prior AI experience

Ethical & Professional: Brief Discussion

What ethical considerations are particularly important in your engineering discipline?

Consider:

- How is your industry using AI tools?

- What ethical concerns are specific to your field?

- How might AI use affect different student populations?

- What professional skills should students develop?

Implementation Examples by Discipline

We’ve created detailed example implementations that map to different positions on the taxonomy:

- Mechanical: Concept visualization in thermodynamics (Conceptual Understanding, Guided Integration)

- Electrical: Circuit analysis feedback (Skill Development, Embedded Practice)

- Civil: Structural design iterations (Process Augmentation, Transformative Redesign)

- Chemical: Safety visualization (Visualization, Scaffolded Autonomy)

- Computer Science: Algorithm development with Copilot (Skill Development, Guided Exploration)

- Cross-disciplinary: Technical writing feedback, Assessment redesign guide

These examples include detailed implementation steps, sample prompts, and assessment strategies

Prompt Engineering in Engineering Education

Key principles for effective prompts:

- Be specific about engineering context

- Include relevant technical parameters

- Clarify expected detail/technical level

- Request verification steps

- Include format specifications

- Consider iterative prompt chains

Example: From general to specific

❌ “Explain entropy”

✅ “Explain entropy from a statistical mechanics perspective for junior-level thermodynamics students”
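One way to apply these principles consistently is to assemble prompts from explicit fields rather than typing them freehand. The sketch below is illustrative only: `build_prompt`, its parameters, and the wording of the verification request are hypothetical, and it produces plain text that could be pasted into any chat tool.

```python
def build_prompt(concept, perspective, audience, fmt=None, ask_verification=True):
    """Assemble a specific prompt from explicit context fields.

    All parameter names are hypothetical; they mirror the principles above
    (engineering context, technical level, format, verification steps).
    """
    parts = [f"Explain {concept} from a {perspective} perspective for {audience}."]
    if fmt:
        # Format specification
        parts.append(f"Format the answer as {fmt}.")
    if ask_verification:
        # Request verification steps
        parts.append(
            "List the assumptions you made and one way a student could check the result."
        )
    return " ".join(parts)


prompt = build_prompt(
    concept="entropy",
    perspective="statistical mechanics",
    audience="junior-level thermodynamics students",
    fmt="three short paragraphs",
)
print(prompt)
```

Keeping the fields separate also makes it easy to vary one element at a time, for example the audience level, when building an iterative prompt chain.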

Sample prompt structures:

- Conceptual explanation prompt:

  - Multiple perspective requests

  - Connection to applications

  - Misconception identification

- Technical verification prompt:

  - Solution review with error identification

  - Underlying principle explanation

  - Alternative approach suggestion

- Design exploration prompt:

  - Multiple solution generation

  - Constraint-based evaluation

  - Trade-off analysis

See the examples directory for discipline-specific prompt templates

Assessment Redesign Principles

Moving beyond traditional assessment in AI-integrated courses:

- Assess the Process: Documentation of AI interactions, decision-making, verification

- Focus on Higher-Order Skills: Engineering judgment, critical evaluation, constraint analysis

- Maintain Knowledge Verification: Targeted components without AI assistance

- Balance Product and Process: Evaluate both outcomes and the methods used

- Clear Attribution Standards: Consistent guidelines for documenting AI contributions

Rubric elements should reward critical thinking about AI outputs, not just the final product quality. Good rubrics include evaluation of verification strategies and decision rationale.
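As a rough illustration of a rubric that weights process alongside product, the sketch below computes a weighted score. The criterion names, the weights, and the 0 to 4 rating scale are hypothetical placeholders, not values from the Assessment Redesign Guide.

```python
# Hypothetical weights: the final product counts for less than half,
# so verification and rationale together outweigh it.
RUBRIC_WEIGHTS = {
    "final_product": 0.40,
    "verification_strategy": 0.30,
    "decision_rationale": 0.30,
}


def rubric_score(ratings, weights=RUBRIC_WEIGHTS):
    """Return the weighted score for ratings given on a 0-4 scale."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"Missing ratings for: {sorted(missing)}")
    return sum(weights[criterion] * ratings[criterion] for criterion in weights)


score = rubric_score(
    {"final_product": 4, "verification_strategy": 2, "decision_rationale": 3}
)
print(round(score, 2))
```

With weights like these, a polished submission with weak verification scores noticeably lower than one that documents how AI outputs were checked.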

See our comprehensive Assessment Redesign Guide for detailed rubrics and examples

Implementation Challenges & Solutions

Common Challenges:

- Varying student AI literacy levels

- Technical accuracy verification

- Equity of access to AI tools

- Balance of efficiency vs. learning

- Academic integrity considerations

- Rapid tool evolution

- Student overreliance on AI

Effective Solutions:

- Scaffolded introduction with baseline training

- Create verification protocols and checklists

- Provide institutional or classroom access

- Focus assessment on process and reflection

- Develop clear attribution guidelines

- Emphasize transferable skills beyond tools

- Design assignments requiring critical evaluation

Challenge intensity varies by integration depth and student agency level

PART 3: APPLICATION & PLANNING

Case Study Analysis Activity

Small Group Activity (15 minutes):

- Each group will analyze one case study using the taxonomy

- Use the Case Study Analysis Worksheet provided

- Map the case to each dimension of the taxonomy

- Identify key integration decisions and their rationale

- Discuss how similar approaches might apply to your contexts

- Select one key insight to share with the full group

Case Studies Available

- Mechanical Engineering: ChatGPT for Design Ideation

  Enhancing ideation while maintaining design decision ownership

- Electrical Engineering: Claude for Circuit Analysis Feedback

  Progressive circuit feedback with verification of technical accuracy

- Civil Engineering: Whisper for Accessible Materials

  Automatic transcription enhancing access to field experience

- Chemical Engineering: DALL-E for Safety Visualization

  Visualizing hazards and failure modes through image generation

- Thermodynamics: ChatGPT for Concept Mastery

  Multiple concept representations to deepen understanding

- Data Structures: GitHub Copilot for Algorithm Implementation

  Focusing on algorithm design patterns over syntax details

- Materials Science: Claude for Multi-scale Understanding

  Bridging nano, micro, and macro perspectives with AI explanations

Each case study maps to different positions along the taxonomy dimensions

Implementation Planning

Individual Work (15 minutes):

- Select one course for potential AI integration

- Complete the Implementation Planning Template

- Map current position on each taxonomy dimension

- Choose target positions that align with course goals

- Identify specific implementation steps for 1-2 priority dimensions

- Document anticipated challenges and resources needed

- Share plan with a partner for feedback (5 minutes)
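The mapping of current and target positions can be recorded in a simple structure, as sketched below. The level names come from the Integration Depth and Student Agency dimensions of the taxonomy. Treating the listed order as an ordinal scale, the `DimensionPlan` class, and the sample positions are assumptions made for illustration.

```python
from dataclasses import dataclass

# Level names from the taxonomy; the ordering as a scale is an assumption.
LEVELS = {
    "Integration Depth": [
        "Supplemental Resource",
        "Guided Integration",
        "Embedded Practice",
        "Transformative Redesign",
    ],
    "Student Agency": [
        "Instructor-Directed",
        "Scaffolded Autonomy",
        "Guided Exploration",
        "Full Autonomy",
    ],
}


@dataclass
class DimensionPlan:
    """Hypothetical record of one dimension in an implementation plan."""

    dimension: str
    current: str
    target: str

    def steps_to_target(self) -> int:
        """Number of levels between the current and target positions."""
        levels = LEVELS[self.dimension]
        return levels.index(self.target) - levels.index(self.current)


# Hypothetical positions for one course.
plan = [
    DimensionPlan("Student Agency", "Instructor-Directed", "Scaffolded Autonomy"),
    DimensionPlan("Integration Depth", "Supplemental Resource", "Embedded Practice"),
]

# Largest gap first, as one possible way to pick priority dimensions.
priorities = sorted(plan, key=lambda p: p.steps_to_target(), reverse=True)
for p in priorities:
    print(f"{p.dimension}: {p.current} -> {p.target} ({p.steps_to_target()} steps)")
```

A large gap does not automatically mean high priority; it may instead suggest breaking the change into stages.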

Example Implementation Plan

Course: Thermodynamics II (Junior-level)

Current State:

- No formal AI integration

- Students using AI unofficially

- Traditional problem-based assessment

- Conceptual understanding challenges with entropy, availability, and multi-scale phenomena

Priority Dimensions:

1. Pedagogical Purpose (Conceptual Understanding)

2. Assessment Alignment (Process Documentation)

3. Student Agency (Scaffolded Autonomy)

Implementation Actions:

1. Create prompt library for thermodynamic concepts

2. Develop visual representation activities using image AI

3. Design concept mapping assignment with AI feedback

4. Implement verification protocols for AI explanations

5. Create process portfolio assessment structure

6. Pilot with entropy unit before full implementation

Resources Needed:

- Example prompt collection for key concepts

- Image generation tool access (DALL-E)

- LMS integration for documentation

- Assessment rubrics focused on concept mastery

- Student guidance for critical AI evaluation

Based on the detailed example in our workshop materials

Key Takeaways

Intentional integration is key to effective AI use in engineering education

The taxonomy framework provides multiple dimensions to consider for implementation

Different dimensions may be prioritized based on specific course challenges and goals

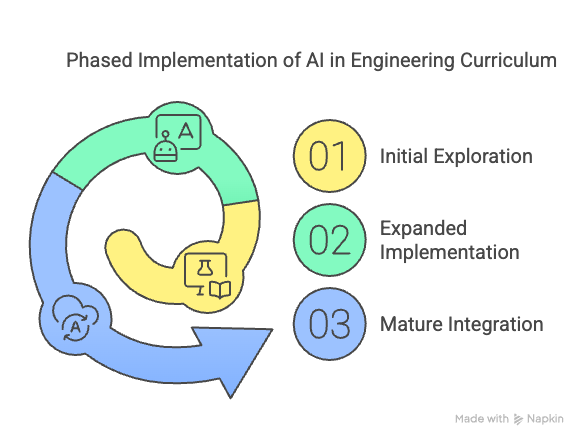

Progressive implementation often works better than complete redesign

Assessment alignment is critical for meaningful integration

Consider implications for student professional development

Resources Available

- Workshop Materials

- Additional Resources

- Expanded Case Studies by Discipline

- Prompt Libraries for Engineering Contexts

- Assessment Examples and Rubrics

- Implementation Planning Guides

Contact Information: Dr. Andrew Katz

Email: [email protected]

Next Steps & Questions

Next Steps:

1. Complete your implementation plan

2. Identify one small action to take in the next month

3. Consider forming discipline-specific implementation groups

4. Explore additional resources provided

Questions & Discussion

What questions do you have about implementing AI in your engineering courses?

Thank you for your participation!