Thoughts on Measuring Information Density in Interviews

Introduction

Why care about information density in interviews (or other kinds of qualitative data, for that matter)? As researchers, educators, and just people who want to understand how information flows in human conversations more generally, we constantly navigate through conversations that vary dramatically in their information content. Some dialogues yield rich insights in mere minutes, while others meander for hours with minimal substance. This variation raises a question I wanted to explore: can we quantitatively measure the "density" of information in human dialogue? And more to the point, if we can, what can we do with that...information?

The ability to measure information density could help inform how we approach qualitative research, media analysis, and even educational assessment. Traditional approaches relying on human coding are subjective and resource-intensive. But recent advances in transformer-based autoregressive language models offer a promising (maybe? one way to find out!) new approach.

Using LLM Logits to Measure Information Density

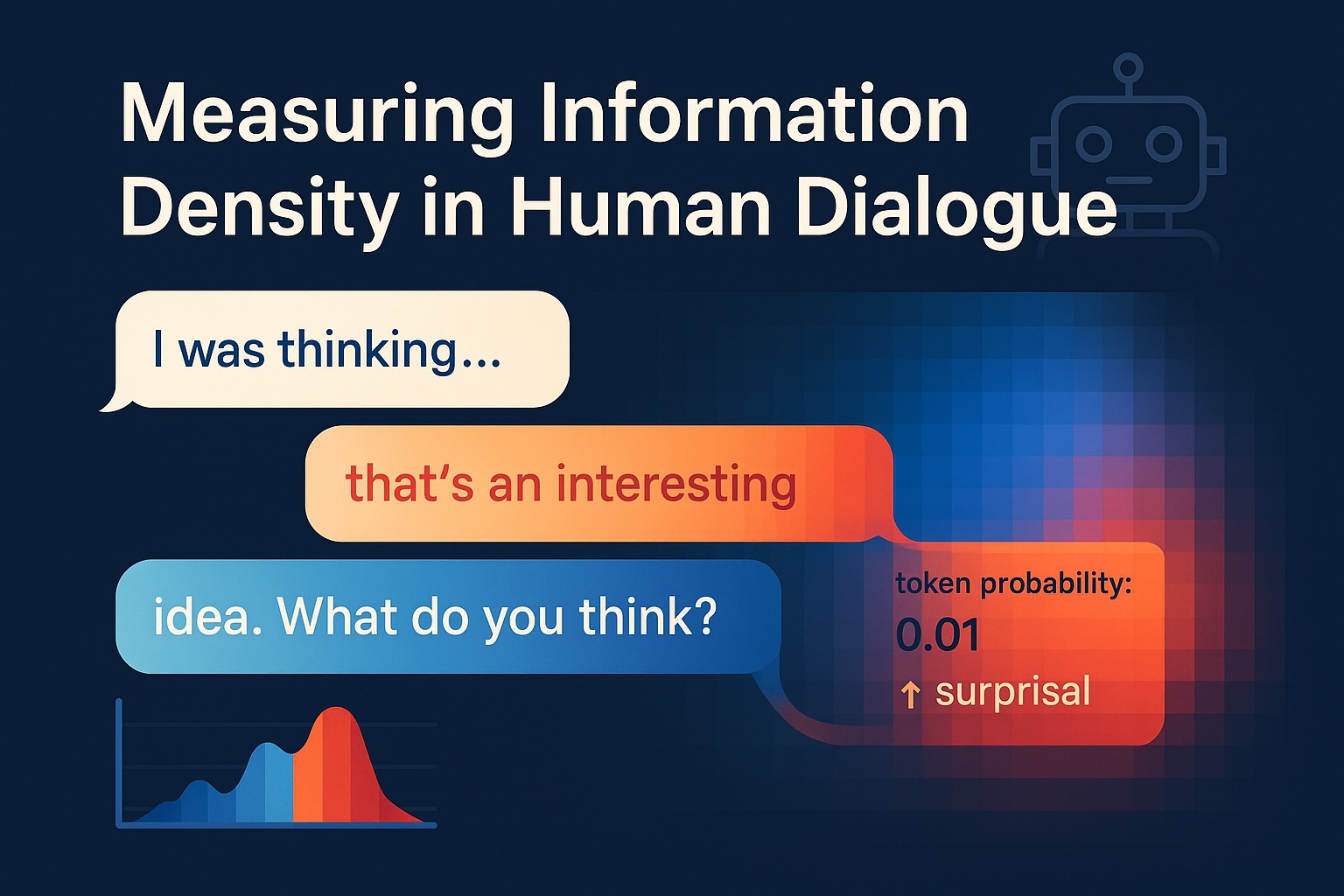

The core idea is simple enough: leverage the predictive mechanisms inside large language models to quantify information content. Here's the approach:

The Theoretical Foundation

In information theory, unpredictability correlates with information content. My background is in chemical and environmental engineering, and information theory is not covered in the usual curricula for those disciplines, so I never had a formal introduction to it. Instead, I first came across the idea of information content by watching a series of lectures by David MacKay. In the first lecture, MacKay describes how Claude Shannon's work defined a message's information content as the amount of unpredictability in the message: the more unpredictable a message is, the more information it contains. This principle applies directly to language: predictable statements carry less information than surprising ones.
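For reference, the Shannon information content of an outcome $x$ with probability $P(x)$ is usually written as follows (the symbol $h$ is a notational choice made here, not something taken from the lectures as quoted in this post):

$$
h(x) = \log_2 \frac{1}{P(x)} = -\log_2 P(x)
$$

So a fair coin flip ($P = 1/2$) carries 1 bit, an outcome with $P = 1/8$ carries 3 bits, and a certain outcome ($P = 1$) carries none.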

Modern transformer-based LLMs like GPT-x, LLaMA-x, or Claude (not Shannon, but the language model from Anthropic!) operate by predicting the next token in a sequence. As part of this process, for each position, they output "logits": unnormalized scores, one per token in the vocabulary, that a softmax turns into a probability for each possible next token. These logits encode the model's uncertainty about what comes next.
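To make the logit-to-probability step concrete, here is a minimal sketch in plain Python. The four-token vocabulary and the logit values are made up for illustration; a real model produces one logit per entry in a vocabulary of tens of thousands of tokens.

```python
import math

def softmax(logits):
    """Convert raw logits into probabilities that sum to 1."""
    m = max(logits)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for a four-token vocabulary at one position.
toy_logits = [2.0, 1.0, 0.1, -1.0]
probs = softmax(toy_logits)

for logit, p in zip(toy_logits, probs):
    print(f"logit={logit:5.1f}  probability={p:.3f}")
```

Larger logits map to larger probabilities, and only the differences between logits matter: adding a constant to every logit leaves the probabilities unchanged.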

From Logits to Information Density

When a language model assigns low probability (reflected in smaller logits) to tokens that actually appear in text, it might suggest those tokens contain high information content - they're "surprising" to the model (and thus, the theory goes, to us). We can calculate this formally:

- Process an interview transcript through a pre-trained LLM

- For each token, extract the logit/probability the model assigned to it conditioned on the previous tokens

- Calculate the negative log probability for each token (this is the surprisal; another way to see this is to think of it as $\log(1/p)$ and realize that as $p \to 1$, $\log(1/p) \to 0$, indicating that when something is certain, the surprisal is $0$)

- Average these values over meaningful segments (sentences, turns, or topics)

The resulting metric gives us a quantitative measure of information density. Higher values indicate segments containing more unexpected information from the model's perspective.
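The steps above can be sketched roughly as follows. This is an illustration under stated assumptions, not a full pipeline: the function names are hypothetical, the logits are toy values rather than real model output, and the code assumes the logits have already been aligned so that row `t` holds the model's scores for the token actually observed at position `t` (with a real model, the logits produced at one position predict the following token, so a shift is needed). Surprisal is reported in bits, which is a choice of log base 2.

```python
import math

def log_softmax(logits):
    """Log-probabilities from raw logits, computed stably."""
    m = max(logits)
    log_total = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_total for z in logits]

def token_surprisals(logits_per_position, token_ids):
    """Surprisal (in bits) of each observed token.

    logits_per_position[t] is assumed to be the model's logits for
    position t, conditioned on all tokens before t.
    """
    surprisals = []
    for logits, tok in zip(logits_per_position, token_ids):
        log_p = log_softmax(logits)[tok]        # natural-log probability
        surprisals.append(-log_p / math.log(2)) # convert nats to bits
    return surprisals

def segment_density(surprisals, segments):
    """Mean surprisal over each half-open (start, end) token range."""
    return [sum(surprisals[s:e]) / (e - s) for s, e in segments]

# Toy example: a four-token vocabulary and three observed tokens.
toy_logits = [
    [0.0, 0.0, 0.0, 0.0],   # model has no idea: every token costs 2 bits
    [10.0, 0.0, 0.0, 0.0],  # model is confident in token 0
    [10.0, 0.0, 0.0, 0.0],  # same confidence, but token 1 appears instead
]
observed = [2, 0, 1]

surprisals = token_surprisals(toy_logits, observed)
densities = segment_density(surprisals, [(0, 1), (1, 3)])
print(surprisals)
print(densities)
```

The second token is almost free because the model expected it, while the third is expensive because the model was confident in something else. Averaging by token count is one normalization choice among several; averaging per word or per second of speech would give different numbers.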

Applications in Interview Analysis

One could imagine this approach enabling several powerful analyses:

Comparing Information Density Across Interviews

The hope is that we might be able to use this method to compare different interviews or segments within the same interview. For example, we might find that certain interview techniques consistently yield higher or lower information density, or that specific topics generate more informative responses.

Identifying Information-Rich Moments

By plotting information density over time within an interview, we can identify "hot spots": moments where the conversation yielded particularly novel or unexpected information. These peaks may correspond to key insights or breakthrough moments, though that would need to be checked against a human reading of the transcript.
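One simple way to flag such hot spots, sketched here under assumptions of my own: smooth the per-turn density with a trailing moving average, then flag turns whose smoothed value exceeds the mean by `k` standard deviations. The window size, the threshold `k`, the function names, and the density values are all illustrative choices, not something the method prescribes.

```python
import statistics

def rolling_mean(values, window):
    """Trailing moving average; early positions average over fewer values."""
    out = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1): i + 1]
        out.append(sum(chunk) / len(chunk))
    return out

def hot_spots(values, window=2, k=1.0):
    """Indices whose smoothed value exceeds mean + k * std of the smoothed series."""
    smoothed = rolling_mean(values, window)
    mu = statistics.mean(smoothed)
    sigma = statistics.pstdev(smoothed)
    return [i for i, v in enumerate(smoothed) if v > mu + k * sigma]

# Hypothetical mean surprisal (bits per token) for eight interview turns.
turn_density = [2.0, 2.1, 1.9, 2.0, 6.0, 6.5, 2.0, 2.1]
spots = hot_spots(turn_density, window=2, k=1.0)
print(spots)
```

Because the average is trailing, a flagged index marks the end of a dense stretch rather than its start. A centered window or a per-speaker baseline might suit some transcripts better.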

Measuring Interviewer Effectiveness

The information density metric can help assess interviewer effectiveness. Does a particular interviewer consistently elicit high-density responses? Do certain question formulations tend to produce more informative answers?

Limitations and Considerations

The approach is promising, but it is far from flawless. It has important limitations:

- Model Biases: The LLM's training data influences what it considers "surprising." Information that's novel to the model may not be novel to human experts.

- Context Sensitivity: Information density depends on context. A statement might be highly informative in one context but trivial in another.

- Qualitative Value: Not all surprises carry equal value. A genuinely insightful comment and a nonsensical statement might both yield high information density scores.

- Domain Specificity: General-purpose LLMs may not accurately assess information density in specialized domains where terminology and concepts differ from typical language use.

Future Directions

Several refinements could enhance this approach:

- Fine-tuning models on domain-specific corpora to better assess information in specialized contexts

- Combining multiple models to get more robust measurements

- Incorporating human feedback to calibrate the system

- Developing interactive interfaces that highlight information-dense segments in real-time

So What?

Measuring information density via LLM logits offers a potentially useful approach for aspects of qualitative data analysis. By quantifying the informational content of conversations, we might be able to develop more effective interview techniques, identify key insights more efficiently, and ultimately extract more value from qualitative research.

This approach bridges information theory, cognitive science, and natural language processing in a way that could transform how we understand and analyze human conversations. As language models continue to improve, one would expect their utility as instruments for measuring the density of information in our exchanges to improve as well.